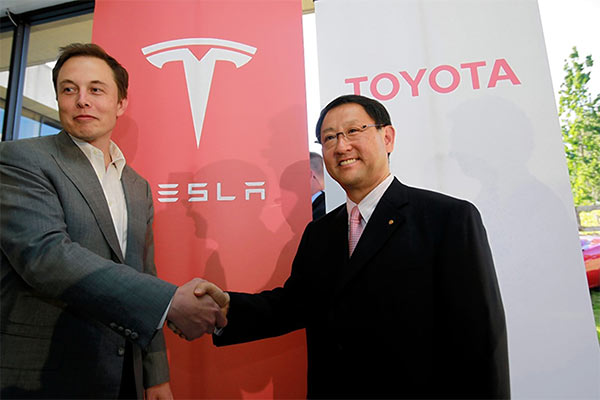

Japanese automaker Toyota adopts Tesla’s camera-only approach to self-driving development.

Its subsidiary, Woven Planet, will use cameras to collect data and train its self-driving system with a neural network.

Woven Planet’s cameras will be 90 percent less expensive than the sensors it previously used.

Tesla believes cameras, rather than radar or LiDAR equipment, are crucial to developing a successful self-driving system.

Toyota subsidiary Woven Planet will use a camera-only approach to develop its self-driving technology, joining Tesla as the only other company pursuing a vision-based strategy in the race to autonomous driving.

Woven Planet said it can use low-cost cameras to collect data and train its self-driving system with a neural network, much like Tesla. The “breakthrough” technology could help drive down costs and scale the company’s self-driving efforts, the company told Reuters.

Using cameras on self-driving cars to collect data is a reliable and accurate way to identify a system’s strengths and shortcomings.

Tesla has used this strategy across its fleet to gather a wide array of data that improves the performance of its Autopilot and Full Self-Driving suites. Woven Planet wants to take the same approach, but collecting data this way is costly and hard to scale when every vehicle must be outfitted with expensive sensors.

“We need a lot of data. And it’s not sufficient to just have a small amount of data that can be collected from a small fleet of very expensive autonomous vehicles,” Michael Benisch, VP of Engineering at Woven Planet, said in the report.

“Rather, we’re trying to demonstrate that we can unlock the advantage that Toyota and a large automaker would have, which is access to a huge corpus of data, but with a much lower fidelity.”

Woven Planet’s cameras will be 90 percent less expensive than the sensors it previously used, and they are simple to install, which could make the project relatively easy to scale.

Tesla has always maintained that cameras are essentially the car’s eyes, and that radar or LiDAR equipment is not necessarily crucial to developing a successful self-driving system. Musk once called LiDAR a “crutch,” and during an earnings call last year he said Tesla would benefit from a camera-only approach to self-driving.

“When your vision works, it works better than the best human because it’s like having eight cameras, it’s like having eyes in the back of your head, besides your head, and has three eyes of different focal distances looking forward. This is — and processing it at a speed that is superhuman.

“There’s no question in my mind that with a pure vision solution, we can make a car that is dramatically safer than the average person,” Musk said during the call.

Benisch clarified that Toyota still plans to use sensors such as LiDAR and radar for its robotaxi and autonomous vehicle projects. That remains the “best, safest approach to developing robotaxis,” according to the report.

“But in many, many years, it’s entirely possible that camera type technology can catch up and overtake some of the more advanced sensors,” Benisch added.

During Tesla’s most recent earnings call, Musk said he would be “shocked” if Tesla didn’t complete its Full Self-Driving suite by the end of the year.
